Affiliation:

1Department of Medical Laboratory Science, Neuropsychiatric Hospital, Abeokuta 110101, Nigeria

2Faculty of Medicine, Department of Public Health and Maritime Transport, University of Thessaly, 38221 Volos, Greece

ORCID: https://orcid.org/0000-0002-3809-4271

Affiliation:

1Department of Medical Laboratory Science, Neuropsychiatric Hospital, Abeokuta 110101, Nigeria

3Department of Medical Laboratory Science, College of Basic Health Sciences, Achievers University, Owo 341104, Nigeria

Email: uthmanadebayo85@gmail.com

ORCID: https://orcid.org/0009-0000-2000-7451

Affiliation:

4Royal (Dick) School of Veterinary Medicine, University of Edinburgh, EH25 9RG Scotland, United Kingdom

5YouthRISE Nigeria, Abuja 900246, Nigeria

ORCID: https://orcid.org/0009-0007-9371-0915

Affiliation:

6Department of Public Health, Faculty of Allied Health Sciences, Kwara State University, Malete 241104, Nigeria

ORCID: https://orcid.org/0009-0008-6684-6224

Affiliation:

7Department of Medicine and Surgery, Ladoke Akintola University of Technology, Ogbomoso 210214, Nigeria

ORCID: https://orcid.org/0000-0002-3821-2143

Affiliation:

8KIT Royal Tropical Institute, Vrije University, 1090 HA Amsterdam, The Netherlands

ORCID: https://orcid.org/0009-0008-0874-6487

Affiliation:

9Department of Medical Laboratory, Osun State College of Health Technology, Ilesa 230101, Nigeria

ORCID: https://orcid.org/0000-0001-7070-276X

Affiliation:

1Department of Medical Laboratory Science, Neuropsychiatric Hospital, Abeokuta 110101, Nigeria

10Department of Medical Laboratory Science, McPherson University, Seriki Sotayo 110117, Nigeria

ORCID: https://orcid.org/0000-0003-3587-9767

Affiliation:

11Department of Global Health and Development, Faculty of Public Health and Policy, London School of Hygiene and Tropical Medicine, WC1E 7HT London, United Kingdom

12Center for University Research, University of Makati, Makati City 1215, Philippines

13Research Office, Palompon Institute of Technology, Palompon 6538, Philippines

ORCID: https://orcid.org/0000-0002-2179-6365

Explor Digit Health Technol. 2025;3:101174 DOI: https://doi.org/10.37349/edht.2025.101174

Received: August 21, 2025 Accepted: November 05, 2025 Published: November 18, 2025

Academic Editor: Subho Chakrabarti, Postgraduate Institute of Medical Education and Research (PGIMER), India

The integration of artificial intelligence (AI) into psychiatric care is rapidly revolutionizing diagnosis, risk stratification, therapy customization, and the delivery of mental health services. This narrative review synthesized recent research on ethical issues, methodological challenges, and practical applications of AI in psychiatry. A comprehensive literature search was conducted with no limitation to publication year using PubMed, Scopus, Web of Science, and Google Scholar to identify peer-reviewed articles and grey literature related to the integration of AI in psychiatry. By leveraging machine learning, natural language processing, digital phenotyping, and multimodal data integration, AI enhances early identification, predicts relapses and treatment resistance, and facilitates precision pharmacopsychiatry. This review highlights the advancements in the integration of AI in psychiatric care, such as chatbot-mediated psychotherapy, reinforcement learning for clinical decision-making, and AI-driven triage systems in resource-constrained environments. However, there are still serious concerns about data privacy, algorithmic bias, informed consent, and the interpretability of AI systems. Other barriers to fair and safe implementation include discrepancies in training datasets, underrepresentation of marginalized groups, and a lack of clinician preparedness. There is a need for transparent, explainable, and ethically regulated AI systems that enhance, rather than replace, human decision-making. A hybrid human-AI approach to psychiatry is recommended to address these limitations, while interdisciplinary studies, strong validation frameworks, and inclusive policymaking are needed to guarantee that AI-enhanced mental health treatment continues to be effective, fair, and reliable.

Mental health is a crucial component of overall well-being, defined by the World Health Organization (WHO) as a state where individuals can recognize their abilities, handle daily stresses, work productively, and contribute to their communities [1, 2]. A mentally healthy person is emotionally, psychologically, and socially stable, reflected by a sense of contentment and control over their environment [3]. Despite this, mental health needs remain largely unmet for many, with approximately 450 million people suffering from mental illness globally [4]. Mental disorders have substantial economic and public health implications worldwide. WHO estimates that they contribute to 14% of the global burden of disease (GBD) [5]. According to the 2019 GBD, approximately 5% of the global burden, about 125 million cases, was directly attributed to mental disorders, increasing to 12% when including related issues such as substance use, neurological disorders, chronic pain, and self-harm [2, 6]. When considering compositional approaches, mental disorders account for 16% of disability-adjusted life years, with an additional 97 million cases [2]. Despite growing awareness, psychiatry continues to face major challenges in early detection, accurate diagnosis, and equitable access to care. Diagnostic decisions often rely on subjective clinical interpretation rather than objective biomarkers, and treatment responses vary widely across individuals [7]. These limitations highlight the need for innovative, data-driven strategies that can enhance diagnostic precision, personalize therapy, and expand access to mental health services.

Artificial intelligence (AI) is reshaping healthcare across both preventive and curative domains [8]. With a reported disease detection accuracy of 94.5% in 2023, its potential is clear, though integration is hindered by mistrust, opaque outputs, privacy concerns, and significant technical, ethical, regulatory, and logistical barriers, particularly in low- and middle-income countries (LMICs) [8]. Despite these challenges, AI continues to emerge as a powerful force linking technology and medicine through early diagnosis and treatment. In psychiatry, AI is increasingly applied to address shortages of trained professionals and limited access to care. Its uses include improving early detection and diagnosis by analyzing speech, neuroimaging, and behavioral data, thereby enhancing accuracy and timely interventions [9, 10]. AI also supports personalized treatment planning, predicts disease progression, and facilitates real-time symptom monitoring for disorders such as depression, schizophrenia, autism spectrum disorder (ASD), addiction, and sleep disturbances [9, 10].

In addition, AI-powered chatbots and digital interventions provide scalable psychoeducation, therapy, and emotional support, extending mental health services beyond traditional clinical settings through online platforms, smartphone applications, and digital games [11]. These tools offer coping strategies, assist with communication, and deliver ongoing support, particularly valuable in resource-limited contexts [11]. In academic psychiatry, AI accelerates data analysis, systematic reviews, and trend identification, thereby enhancing scientific discovery and the dissemination of knowledge [12]. This review explores how AI and predictive analytics are reshaping psychiatric diagnostics and treatment, suggesting a paradigm shift from reactive to predictive models.

We conducted this narrative literature review to critically examine current advancements in the application of AI to the diagnosis and management of psychiatric disorders. Emerging trends in AI-driven diagnostics, predictive analytics, customized therapy, digital phenotyping, and ethical implementation frameworks were given special attention. A comprehensive literature search was conducted in PubMed, Scopus, Web of Science, and Google Scholar to identify peer-reviewed articles and grey literature on the integration of AI in psychiatry. No publication year limits were applied, although recent studies were prioritized to ensure data currency. The search terms used included “artificial intelligence,” “machine learning,” “psychiatry,” “mental health,” “digital phenotyping,” “neuroimaging,” “biomarkers,” “predictive analytics,” “precision psychiatry,” “algorithmic bias,” and “clinical decision support”. The inclusion criteria comprised studies published in English that addressed the use, development, or evaluation of AI methods in psychiatric research, diagnosis, or clinical care. Eligible literature included original research articles, systematic or narrative reviews, meta-analyses, policy papers, perspectives, and relevant grey literature. The exclusion criteria included non-English publications, duplicate reports, and studies that focused solely on neurological disorders or computational model development without psychiatric relevance. Articles that did not align with the review’s objective or lacked substantive discussion of AI applications in psychiatry were also excluded. To find common themes and recurring issues throughout the review, an iterative thematic analysis was utilized. Although this review does not attempt to be systematic, it makes an effort to present a cutting-edge synthesis that emphasizes both the potential and drawbacks of AI in the field of psychiatry. 
The implications for clinical workflow integration, equity in access and outcomes, transparency in AI design, and interdisciplinary methods all received particular focus.

Diagnosing psychiatric disorders remains a complex and subjective endeavor within medicine [13]. Unlike other specialties that rely on biomarkers or imaging, psychiatry still depends largely on patient self-reports, clinical observations, and interpretive judgment. Conditions such as depression, anxiety, and post-traumatic stress disorder (PTSD) often exhibit overlapping symptoms such as disturbed sleep, attention deficits, and emotional instability, making it difficult to distinguish one disorder from another. As a result, comorbidity is common, with individuals frequently meeting criteria for multiple diagnoses simultaneously [14]. Although classification systems like the Diagnostic and Statistical Manual of Mental Disorders and International Classification of Diseases, 11th Revision were developed to promote diagnostic consistency and guide treatment and research, they face increasing scrutiny [15]. Clinicians often apply these categories flexibly during consultations, and overly rigid use can reduce assessments to mechanical checklists, neglecting individual experiences [16]. Furthermore, most psychiatric diagnoses still lack clearly defined biological markers [14]. The effectiveness of certain treatments across a range of diagnoses, such as the use of selective serotonin reuptake inhibitors for depression, anxiety, and binge-eating disorder, raises questions about whether current diagnostic categories represent distinct entities or overlapping manifestations of shared underlying mechanisms [14].

The biopsychosocial model is intended to integrate biological, psychological, and environmental factors, but in practice, it is often inconsistently applied. While the model’s breadth allows for a comprehensive understanding of mental distress, its all-encompassing scope can hinder diagnostic clarity and treatment precision. Changes to diagnostic definitions, such as those for ASD, have occasionally restricted access to necessary services for individuals with clear functional impairments [14]. These diagnostic challenges are compounded by the global treatment gap and the persistent disconnect between research and clinical practice. Even when effective treatments exist, access remains highly uneven, especially in low-resource settings. Evidence-based therapies are either unavailable or not implemented in line with evolving clinical evidence [14]. These issues highlight the need for more individualized, evidence-driven, and accessible models of care [14, 16].

Delays in accessing mental health care remain widespread in LMICs, where services are frequently centralized in a few urban psychiatric facilities. In such regions, treatment gaps for depression and anxiety exceed 85%, while over 90% of individuals with psychotic disorders in sub-Saharan Africa and parts of South Asia receive no formal care [17, 18]. These delays contribute to worsening symptoms, chronicity, and increased disability. Once treatment begins, the prevailing pharmacological approach often relies on trial-and-error. Clinicians start with standard medications and adjust based on patient response, a process that can take months. Only 30–40% of patients experience remission after the first antidepressant trial [19]. While augmentation strategies and newer techniques like transcranial magnetic stimulation offer benefits, they are generally limited to high-resource settings [20].

The absence of reliable biomarkers and predictive tools further complicates treatment. Innovative care models are needed to bridge these gaps. Chile’s Regime of Explicit Health Guarantees, which integrates pharmacological and psychosocial interventions at the primary care level, has improved access and outcomes [21]. In Zimbabwe, the Friendship Bench program trains lay health workers to deliver psychological support, expanding mental health coverage in a cost-effective way [22]. Such initiatives demonstrate how scalable, community-based solutions can reduce delays and improve treatment outcomes, bringing care closer to personalized, evidence-informed standards. However, ensuring sustainable funding and equitable implementation across diverse populations remains a major challenge [23]. Stigma and systemic inequities continue to shape access to mental health care. Structural stigma reflected in policy neglect, underfunding, and institutional bias exacerbates existing disparities. Marginalized groups, including ethnic minorities, LGBTQ+ individuals, and those in poverty or conflict zones, face compounded barriers to diagnosis and treatment. Cultural stigma may discourage care-seeking, while economic and geographic limitations render services inaccessible. Eliminating stigma is not a secondary issue but a fundamental requirement for achieving mental health equity [24]. Yet stigma, inequity, and the persistent lack of personalized diagnostic tools highlight the urgent need for innovative, data-driven approaches to psychiatric care. Traditional methods, though valuable, remain constrained by subjective assessment, limited scalability, and uneven access across populations [25]. Recent advances in computational science have introduced the possibility of integrating large-scale biological, psychological, and behavioral data to generate more objective insights into psychiatric conditions. 
Within this evolving landscape, AI and its subfields offer powerful means to address long-standing diagnostic and treatment challenges by identifying complex, multidimensional patterns that elude conventional analysis [26].

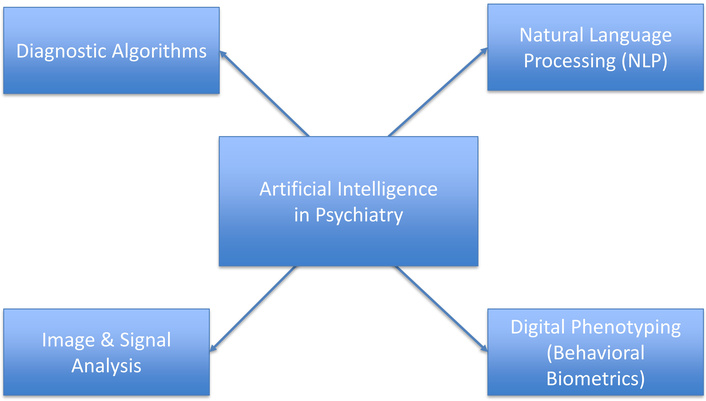

AI and its subfields, machine learning (ML), deep learning (DL), and natural language processing (NLP), enable computers to learn from clinical, behavioral, and linguistic data to uncover patterns that support diagnosis and treatment [27]. These models are typically developed through training, validation, and testing processes that help optimize performance and ensure dependable results in clinical applications. By combining these learning methods, AI enhances the capacity to interpret complex data and generate insights that complement professional judgment [28]. AI is reshaping many areas of healthcare, including psychiatry, with the goal of improving diagnostic accuracy, efficiency, and early detection of mental health conditions. Its application in psychiatry spans four main domains: diagnostic algorithms, NLP, image and signal analysis, and digital phenotyping through behavioral biometrics (Figure 1) [29]. AI-driven diagnostic tools are transforming psychiatric evaluations by offering standardized, data-based assessments. Using ML models, these systems analyze patient data to identify patterns and predict mental health disorders. Algorithms such as neural networks, support vector machines, and decision trees have been trained on clinical datasets to detect conditions like bipolar disorder, schizophrenia, and depression [30]. One key advantage of these tools is their ability to capture complex, nonlinear relationships that clinicians might overlook. Studies, including those from the Predictive Analytics Competition, demonstrate how integrating data on mood, cognition, and sleep can enhance the accuracy of diagnosing major depressive disorder, outperforming traditional methods [31]. These systems can also reduce diagnostic bias and offer consistent second opinions, though their reliability is tied to the quality and representativeness of the training data, which poses challenges for underrepresented populations [32].

Figure 1. Key domains of AI in psychiatric diagnosis. Illustration generated using Microsoft PowerPoint based on data from Lee et al., 2021 [29]. AI: artificial intelligence.

NLP, a branch of AI, plays a crucial role in analyzing unstructured text from diverse sources such as electronic health records (EHRs), therapy transcripts, and social media. By processing clinical notes, NLP algorithms can identify key mental health indicators, including signs of depression, anxiety, and suicidal ideation. Subtle linguistic cues in EHRs often hint at deteriorating mental states, and NLP systems can detect these early signals. For instance, sentiment analysis and topic modeling have helped identify PTSD and schizophrenia risks in veterans [33]. Outside clinical settings, platforms like Twitter and Reddit have been mined for linguistic patterns linked to depression, such as reduced social interaction, frequent use of first-person pronouns, and negative emotional language [34]. AI is also advancing the analysis of neuroimaging and electrophysiological data to identify biomarkers for psychiatric disorders. Techniques such as structural and functional magnetic resonance imaging, as well as electroencephalogram (EEG), produce complex datasets that ML models can interpret to detect abnormalities associated with schizophrenia, attention-deficit/hyperactivity disorder, and depression [35]. For example, convolutional neural networks have achieved up to 80% accuracy in distinguishing schizophrenia patients from healthy individuals based on brain connectivity patterns [36]. Similarly, EEG data processed through AI can reveal abnormal brain wave activity linked to mood and anxiety disorders. These tools not only support diagnosis but also monitor treatment effectiveness by tracking changes in brain function following interventions like medication or cognitive-behavioral therapy (CBT) [37].
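As a rough illustration of the linguistic markers mentioned above (frequent first-person pronouns and negative emotional language), the following minimal Python sketch computes two simple rates from a piece of text. The word lists and the function name `linguistic_markers` are hypothetical and deliberately tiny; they are assumptions for illustration, not the lexicons or models used in the cited studies, and the output is not a diagnostic signal.

```python
import re

# Hypothetical, deliberately tiny word lists for illustration only;
# published studies rely on validated lexicons and trained models.
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}
NEGATIVE_WORDS = {"sad", "hopeless", "tired", "alone", "worthless"}


def linguistic_markers(text: str) -> dict:
    """Return the share of tokens that are first-person pronouns or negative words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return {"first_person_rate": 0.0, "negative_rate": 0.0}
    first_person = sum(token in FIRST_PERSON for token in tokens)
    negative = sum(token in NEGATIVE_WORDS for token in tokens)
    return {
        "first_person_rate": first_person / len(tokens),
        "negative_rate": negative / len(tokens),
    }


# Invented example sentence: 11 tokens, 2 first-person pronouns, 2 negative words.
example = linguistic_markers("I feel so tired and alone, and my days are empty")
```

In practice such counts would serve only as input features for a validated model, alongside the sentiment analysis and topic modeling approaches described above.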

Digital phenotyping, which involves collecting behavioral data through smartphones and wearable devices, enables continuous monitoring of mental health. AI algorithms evaluate inputs such as movement patterns, phone usage, typing speed, sleep behavior, and speech characteristics to detect early signs of psychiatric conditions [9]. For instance, decreased mobility or irregular sleep tracked via smartphones may signal a depression relapse. Similarly, behavioral biometrics such as facial expressions, voice tone, and keystroke dynamics have shown promise in identifying early signs of psychosis and bipolar disorder [38]. These technologies allow for early intervention before acute episodes occur, shifting psychiatry toward proactive rather than reactive care.
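The idea of detecting decreased mobility from passively collected data can be sketched as a comparison of each new day against the person's own baseline. The example below is a minimal sketch under stated assumptions: the function name `flag_low_activity`, the 14-day baseline window, the z-score threshold of -2, and the step counts are all hypothetical and are not taken from the cited studies.

```python
from statistics import mean, stdev


def flag_low_activity(daily_steps, baseline_days=14, z_threshold=-2.0):
    """Flag days whose step count falls well below the person's own baseline.

    The first `baseline_days` values define the baseline; each later day is
    flagged when its z-score against that baseline is below `z_threshold`.
    Returns the list of flagged day indices.
    """
    if baseline_days < 2 or len(daily_steps) <= baseline_days:
        raise ValueError("need at least two baseline days plus one day to score")
    baseline = daily_steps[:baseline_days]
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    flags = []
    for day_index in range(baseline_days, len(daily_steps)):
        z = (daily_steps[day_index] - mu) / sigma
        if z < z_threshold:
            flags.append(day_index)
    return flags


# Invented step counts: 14 stable baseline days, one ordinary day, two very low days.
steps = [8000, 8200, 7900, 8100, 8050, 7950, 8000, 8150, 7850, 8100,
         8000, 7900, 8200, 8050, 7900, 3000, 2500]
flagged = flag_low_activity(steps)
```

A personal baseline is used here because absolute activity levels differ widely between individuals; a flagged day would at most prompt a clinician-reviewed follow-up, not an automated conclusion.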

Predictive analytics is reshaping mental healthcare by enabling clinicians to identify individuals at heightened risk for adverse outcomes. Through data-driven risk stratification, predictive models categorize patients based on their likelihood of experiencing events such as suicide, relapse, or the emergence of psychiatric symptoms. Leveraging tools like ML, wearable devices, EHRs, and social media data, these models facilitate early detection and personalized interventions. Key applications include identifying prodromal symptoms, forecasting relapse or treatment resistance, and assessing suicide risk [39]. Suicide is the most severe of these outcomes, and conventional assessments often fail to identify those at immediate risk. Predictive models built on structured EHR data incorporating demographic profiles, medication history, hospitalizations, and psychiatric diagnoses have shown greater precision than clinical judgment alone in detecting risk [40]. For example, the United States Army’s Study to Assess Risk and Resilience in Servicemembers demonstrated that predictive analytics could identify high-risk individuals, with over 50% of suicides occurring in the top 5% of predicted risk cases [41]. NLP applied to clinicians’ notes further enhances risk assessment by detecting subtle cues like pessimism, which are often absent from diagnostic codes [42]. Beyond clinical settings, social media offers valuable behavioral data. AI models analyzing language patterns, emotional tone, and posting behavior have identified users at elevated suicide risk, presenting opportunities for earlier outreach [43].
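Statements such as "over 50% of events occurring in the top 5% of predicted risk" describe how strongly a model concentrates events among its highest-scored cases. The sketch below shows one way this quantity could be computed. The function name `capture_in_top_fraction` and the data are hypothetical and synthetic; they are assumptions for illustration and do not reproduce the method or results of the cited study.

```python
def capture_in_top_fraction(risk_scores, outcomes, fraction=0.05):
    """Share of all observed events that fall among the highest-scored cases.

    risk_scores: predicted risk per person (higher means riskier).
    outcomes:    1 if the event occurred for that person, else 0.
    fraction:    size of the top-risk stratum, e.g. 0.05 for the top 5%.
    """
    if len(risk_scores) != len(outcomes):
        raise ValueError("risk_scores and outcomes must have the same length")
    total_events = sum(outcomes)
    if total_events == 0:
        raise ValueError("no events observed; capture is undefined")
    top_n = max(1, round(len(risk_scores) * fraction))
    ranked = sorted(zip(risk_scores, outcomes), key=lambda pair: pair[0], reverse=True)
    events_in_top = sum(outcome for _, outcome in ranked[:top_n])
    return events_in_top / total_events


# Synthetic cohort of 100 people with scores 0..99 and four events,
# three of which fall among the five highest-scored people.
scores = list(range(100))
events = [1 if i in (99, 98, 97, 50) else 0 for i in range(100)]
capture = capture_in_top_fraction(scores, events, fraction=0.05)
```

A high capture value says that most events are concentrated in a small stratum, but it does not by itself say how many people in that stratum will experience the event, which is why such figures are usually reported together with other metrics.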

Mental health conditions such as schizophrenia, bipolar disorder, and depression often involve recurring episodes. Predictive analytics can anticipate these relapses and help identify individuals unlikely to respond to standard treatments. By analyzing continuous inputs from wearable sensors, self-reports, and longitudinal EHRs, AI models can flag early signs of clinical deterioration before it becomes severe [30]. Behavioral and physiological indicators like disrupted sleep, reduced physical activity, or vocal pattern changes captured through smartphones have also proven effective in forecasting depressive relapses, enabling passive and nonintrusive monitoring. Prediction tools have also been developed to assess the likelihood of treatment resistance in disorders like schizophrenia, incorporating factors such as early symptom severity, substance use history, and age of onset. Early identification of resistance allows clinicians to promptly introduce alternatives like clozapine, improving patient outcomes [39]. ML is also being used to personalize treatment, with decision-tree models suggesting antidepressants based on patient history, comorbidities, and genetic factors, thereby minimizing side effects and reducing trial-and-error prescriptions [44, 45]. Prodromal symptoms, early warning signs of psychiatric disorders, are key targets for predictive analytics. By integrating clinical, genetic, and cognitive data, ML models such as those from the North American Prodrome Longitudinal Study can predict conversion to psychosis within two years with up to 80% accuracy [46]. Speech and language irregularities, which are hallmarks of prodromal states, have also been successfully analyzed using NLP. Reduced coherence, use of neologisms, and unusual speech patterns have been shown to predict psychosis onset with high reliability, offering non-invasive, scalable screening methods [47].

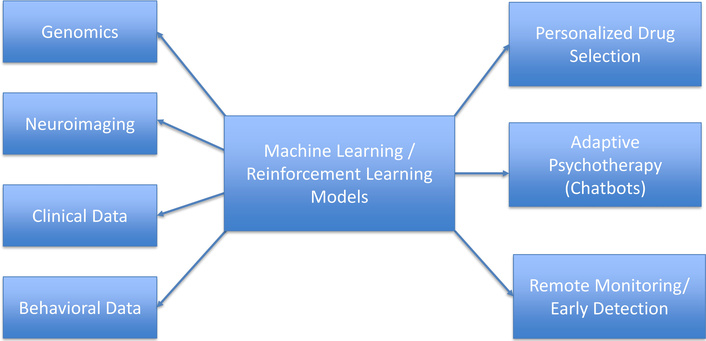

AI is transforming psychiatry by enabling personalized, data-driven treatment strategies that move beyond traditional trial-and-error approaches. In pharmacopsychiatry, ML models integrate genetic, neuroimaging, and clinical data to predict drug responses, optimize dosing, and reduce side effects (Figure 2) [48]. Pharmacogenomic markers such as cytochrome P450 polymorphisms guide the selection of antidepressants and antipsychotics, while AI-powered polygenic risk scores combine genomic and clinical inputs to anticipate adverse effects or therapeutic success [49]. These approaches show particular promise in treatment-resistant depression, though challenges remain in harmonizing data, ensuring equitable representation, and translating findings into routine practice [50]. AI is also advancing psychotherapy through adaptive digital tools. Chatbots like Woebot and Wysa, grounded in CBT, provide real-time, scalable support by tailoring interventions such as journaling prompts and cognitive reframing to user input (Figure 2) [51]. Over time, these tools track emotional trends, allowing them to dynamically adjust therapeutic strategies. They reduce barriers like stigma, cost, and limited access, especially in underserved settings. Despite concerns over data privacy, algorithmic bias, and limited human empathy, evidence suggests that such systems complement traditional therapy by improving accessibility and engagement [52].

Beyond therapy, AI-driven digital therapeutics and remote monitoring platforms leverage data from wearables and smartphones to detect early warning signs of mental health decline. By analyzing speech patterns, sleep cycles, heart rate, and movement, these systems predict depressive episodes or schizophrenia symptom severity, enabling timely interventions such as nudges, referrals, or micro-interventions [53, 54]. In clinical decision-making, reinforcement learning (RL), a branch of AI, is reshaping how treatment plans evolve. Unlike static models, RL learns through interaction and feedback, which suits the goal of optimizing patient outcomes over time. In psychiatry, RL-based systems have been designed to personalize depression treatments by considering symptom trajectories and patient responses when selecting subsequent therapeutic steps [55]. These models are particularly effective in managing complex conditions such as PTSD or bipolar disorder by balancing short-term relief with long-term recovery. Additionally, RL can refine the delivery of CBT by adapting modules to user engagement and emotional state. Though still emerging, RL-enhanced decision support tools hold promise for delivering adaptive, real-time mental health care that evolves alongside the patient [56].

The integration of AI into psychiatric practice and research raises significant ethical, legal, and equity concerns. Unlike general medical records, psychiatric data often contain highly sensitive information, including vocal inflexions, emotional states, behavioral patterns, and even social media content—domains that remain deeply stigmatized. The ethical complexity deepens with passive data collection methods via smartphones, biosensors, and digital phenotyping, which introduce new avenues for gathering and analyzing personal information. Ensuring rigorous safeguards is therefore essential to protect individual dignity and trust [57]. AI tools in psychiatry, especially those utilizing ML and NLP, often rely on datasets that are either too small or insufficiently diverse, limiting their reliability and inclusivity [29]. Even when comprehensive datasets are developed, the risk of re-identifying individuals remains high, particularly when combining data types such as genomic information, clinician notes, and real-time behavioral assessments [58]. Conventional one-time consent procedures are inadequate in such dynamic environments. In response, adaptive consent models have been proposed to allow participants to update their data-sharing preferences over time, although these remain underutilized in psychiatric research [59].

Bias in AI algorithms further complicates efforts toward mental health equity. AI systems trained primarily on data from wealthier or majority populations often perform poorly when applied to marginalized groups, including Black, Indigenous, and LGBTQ+ individuals. This underrepresentation not only risks diagnostic inaccuracies but also fosters over-surveillance, thereby deepening existing disparities [56, 60]. While global organizations such as the WHO and Organization for Economic Co-operation and Development have proposed broad ethical principles like fairness, transparency, and accountability, these frameworks often lack enforceable mechanisms and fail to address the unique nuances of psychiatric care [61, 62]. Stronger regulatory measures, such as mandatory algorithmic audits, robust data protection standards, and the involvement of diverse stakeholders, including patient advocates, are urgently needed. Recent evidence underscores the persistence of algorithmic bias. A 2025 study comparing ChatGPT, Gemini, Claude, and NewMes-v15 found significant variation in psychiatric assessments depending on racial cues in prompts. Notably, NewMes, developed for a specific regional context, demonstrated the greatest degree of racial bias, while Gemini exhibited the least [63]. These findings align with earlier reports showing systematic misrepresentation of marginalized groups, such as the overdiagnosis of schizophrenia in Black men and the underdiagnosis of depression in women [64, 65]. Even linguistic variations such as African American Vernacular English can trigger biased algorithmic responses, raising concerns not only about diagnostic equity but also about therapeutic empathy [66]. Although increasing model size can reduce bias, it does not fully resolve these issues. Meaningful progress requires transparent data sources, demographic fairness audits, and clinician training to identify and mitigate algorithmic disparities. 
Without these safeguards, AI risks perpetuating the very inequities it seeks to address, undermining both the promise of technological innovation and the ethical foundation of psychiatric care. Furthermore, AI tools in psychiatry should not be used for self-diagnosis or unsupervised interpretation. Automated assessments can oversimplify the complex biological, psychological, and social dimensions of mental illness, increasing the risk of misjudgment or harm. Accurate diagnosis and treatment require the contextual understanding and ethical oversight of qualified mental-health professionals [67]. Ensuring that AI applications function under professional supervision is therefore vital to safeguard patients, maintain clinical integrity, and uphold the ethical standards of psychiatric care.
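One simple form of the demographic fairness audits called for above is to compare an error rate across groups. The sketch below computes the false-negative rate (missed true cases) per group. The function name `false_negative_rate_by_group`, the group labels, and the records are hypothetical and synthetic; this is an illustrative assumption about what such an audit might check, not a procedure described in the cited studies.

```python
from collections import defaultdict


def false_negative_rate_by_group(records):
    """Compute the false-negative rate per demographic group.

    records: iterable of (group, true_label, predicted_label), labels 0 or 1.
    Returns {group: rate}; groups with no true positives map to None.
    """
    positives = defaultdict(int)  # true cases seen per group
    missed = defaultdict(int)     # true cases the model predicted as 0
    groups = set()
    for group, true_label, predicted_label in records:
        groups.add(group)
        if true_label == 1:
            positives[group] += 1
            if predicted_label == 0:
                missed[group] += 1
    return {
        group: (missed[group] / positives[group]) if positives[group] else None
        for group in groups
    }


# Synthetic audit data: the model misses 1 of 4 true cases in group A
# and 2 of 4 true cases in group B.
audit_records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0),
]
rates = false_negative_rate_by_group(audit_records)
```

A gap between groups, as in this invented example, would be a prompt to examine the training data and model rather than a complete fairness assessment; other metrics, such as false-positive rates and calibration, would normally be audited as well.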

The successful application of AI in mental health care depends not only on technical accuracy but also on its seamless integration into daily clinical operations and interoperability with EHR systems. Evidence shows that ML systems built on the Fast Healthcare Interoperability Resources (FHIR) standard can enhance clinical decision-making without disrupting practitioner workflows [68]. Real-world implementations confirm this finding: For example, a DL model embedded in clinical documentation workflows reduced entry time significantly, but only after repeated refinements to address alert fatigue and align with existing practices [69, 70]. These adjustments were essential to building clinician trust and ensuring sustainable adoption.

Clinicians generally recognize the value of AI in psychiatry. A global survey of 791 psychiatrists from 22 countries indicated cautious optimism, with many anticipating gains in efficiency and accessibility [71]. Yet nearly all emphasized that empathy and ethical judgment must remain the responsibility of human providers [68]. Interviews with both psychiatrists and primary care physicians confirmed this sentiment, praising AI’s potential to ease administrative burdens while voicing concerns about transparency, legal liability, and inherent algorithmic bias [72]. Interpretability has emerged as a critical factor for adoption. Clinicians are more likely to trust AI systems that achieve at least 85% predictive accuracy and offer clear, explainable outcomes to guide treatment [73]. In contrast, opaque “black-box” models that increase workload or require additional data entry are met with skepticism. Digital literacy also plays a key role: Studies found that fewer than one-third of mental health professionals had formal training in digital or AI technologies, with most experience limited to telepsychiatry [71, 74]. This highlights the need for comprehensive training in data analysis, ethical AI use, and privacy safeguards to ensure responsible implementation.

Beyond digital literacy, clinician preparedness for AI integration requires practical competence and ethical awareness. Focused training programs should equip psychiatrists and other mental-health professionals to interpret AI outputs, recognize potential bias, and integrate algorithmic insights with clinical judgment. Interdisciplinary collaboration with data scientists and ethicists can further build confidence in responsible adoption while ensuring that AI remains a supportive tool that complements, rather than replaces, human expertise and empathy [75].

In resource-constrained settings, AI-driven triage tools offer a promising approach to addressing the mental health workforce gap. A study in New South Wales, Australia, trained a supervised learning algorithm on emergency triage records, demographics, and presenting symptoms to predict inpatient psychiatric admissions [76]. The model provided interpretable insights, identifying age and triage category as key predictors and highlighting areas where resources could be effectively allocated [76]. This mirrors earlier progress seen in trauma care in LMICs, where ML triage models have outperformed traditional tools like the Kampala Trauma Score in predicting mortality and guiding admission decisions [77]. Similarly, AI-driven voice analysis is gaining traction as a scalable support mechanism. In a mental health helpline trial, a DL model triaged callers in crisis with over 90% accuracy, without continuous human supervision [70]. Advances are also emerging in multimodal AI systems that combine audio and text inputs. In one study analyzing over 14,000 helpline call segments, a model using long short-term memory (LSTM) networks achieved an area under the curve (AUC) of 0.87 in detecting high-risk conversations, supporting the feasibility of continuous automated risk assessment in crisis lines [78].

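
To make the AUC figure reported above easier to interpret, the following is a minimal sketch of how the metric can be computed from model scores. It is not the method used in the cited study; the function name `auc_from_scores` and the toy labels and scores are hypothetical and chosen only for illustration. AUC is treated here as the fraction of (high-risk, low-risk) pairs in which the high-risk item receives the higher score, with ties counted as half.

```python
from itertools import product


def auc_from_scores(labels, scores):
    """Pairwise AUC: share of (positive, negative) pairs ranked correctly.

    labels: 1 = high-risk segment, 0 = low-risk segment (hypothetical coding).
    scores: model risk scores, higher meaning more likely high-risk.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one example of each class")
    wins = 0.0
    for p, n in product(pos, neg):
        if p > n:
            wins += 1.0   # high-risk item ranked above low-risk item
        elif p == n:
            wins += 0.5   # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


# Hypothetical toy data, not taken from any study cited in this article.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.91, 0.78, 0.40, 0.35, 0.62, 0.10, 0.22, 0.85]
auc = auc_from_scores(labels, scores)
print(f"AUC = {auc:.2f}")
```

Under this reading, an AUC of 0.87 would mean that a randomly chosen high-risk segment is scored above a randomly chosen low-risk segment about 87% of the time. The metric says nothing by itself about which decision threshold a crisis line should use.
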
Together, these innovations highlight how AI can strengthen psychiatric care systems by streamlining workflows, enhancing decision-making, and extending support in settings with limited resources. By prioritizing urgent cases, reducing clinician burden, and optimizing resource allocation, AI-driven tools offer a scalable pathway to closing mental health care gaps, particularly in underserved regions (Table 1). While AI offers transformative opportunities, it should be regarded as an assistive technology that augments but does not replace the expertise of mental health professionals. The optimal model combines AI’s analytical precision with the clinician’s contextual understanding, empathy, and ethical reasoning. Human oversight remains essential for interpreting algorithmic outputs, validating predictions, and maintaining therapeutic trust. Integrating AI with clinical judgment thus represents the most sustainable path toward improving psychiatric diagnosis and care quality [79].

Table 1. Integrative applications of artificial intelligence (AI), predictive analytics, and personalized psychiatry in mental health diagnosis and treatment.

| Theme/Focus area | AI/Technology application | Psychiatric impact | Evidence/Example | Citation |
|---|---|---|---|---|
| Stigma, access, and equity | Predictive analytics for population-level needs | Target underserved groups, allocate resources, and reduce inequity | Treatment gaps in LMICs remain high; predictive tools may improve targeting | [60] |
| AI-driven triage | ML models using triage and demographic data | Early identification of high-risk patients in emergency departments | ML predicted psychiatric admissions with key features like triage score, age | [76] |
| Multimodal deep learning for risk detection | Integration of voice + text via LSTM networks | Enhanced detection of high-risk mental health interactions | Model trained on 14,000+ hotline calls, AUC = 0.87 | [78] |
| AI in resource allocation | Mortality prediction in trauma to inform psychiatric adaptation | Better triage protocols in low-resource psychiatric settings | ML outperformed traditional trauma scores in LMICs | [77] |

LMICs: low- and middle-income countries; ML: machine learning; LSTM: long short-term memory; AUC: area under the curve.

Despite advances in pharmacological and psychotherapeutic treatments, substantial gaps persist in both psychiatric care and research. The treatment gap reflects the limited access that many individuals with mental disorders have to adequate and timely care, while the research gap highlights the disconnection between routine clinical practices and approaches grounded in strong scientific evidence [16]. Bridging these gaps requires sustained research efforts aimed at generating robust evidence and translating findings into practice. Although psychiatric practice data have proven useful in some contexts, more comprehensive studies are still necessary to guide future care [80, 81]. The development and application of AI in psychiatry face several major challenges. These include the establishment of clear legal and regulatory frameworks, rigorous validation of AI models, sustainable funding, and seamless integration into clinical workflows. Thorough validation is especially critical to ensure AI systems are safe, accurate, reliable, and clinically meaningful. A particular priority is the design of interpretable AI systems capable of delivering clear, actionable insights for both clinicians and patients [25]. However, current AI models remain limited by narrow training datasets, poor generalizability, and a lack of transparency, underscoring the need for frameworks that enhance interpretability and equitable performance across diverse populations [82].
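
One way to make "equitable performance across diverse populations" concrete is to report a model's sensitivity separately for each subgroup and examine the gap between them. The sketch below is illustrative only: the function name `sensitivity_by_group`, the group labels `site_A` and `site_B`, and all records are hypothetical and do not come from the cited literature.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: sensitivity}, where sensitivity = TP / (TP + FN).

    records: iterable of (group, y_true, y_pred) with 1 = condition present.
    Groups with no true-positive cases are omitted from the result.
    """
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1   # case correctly flagged
            else:
                fn[group] += 1   # case missed by the model
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in sorted(groups)}


# Hypothetical validation records: (subgroup, true label, predicted label).
records = [
    ("site_A", 1, 1), ("site_A", 1, 1), ("site_A", 1, 1),
    ("site_A", 1, 0), ("site_A", 0, 0),
    ("site_B", 1, 1), ("site_B", 1, 0), ("site_B", 1, 0),
    ("site_B", 1, 0), ("site_B", 0, 1),
]
per_group = sensitivity_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
print(per_group, f"sensitivity gap = {gap:.2f}")
```

A large gap between subgroups, as in this toy example, would suggest that aggregate accuracy is masking weaker performance for an underrepresented population. Which subgroups to compare and what gap is acceptable are clinical and ethical decisions that this sketch does not settle.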

Concerns about generalizability remain pervasive. Many AI models depend on large, well-curated datasets, yet psychiatric populations are often underrepresented in such data. This raises the risk of reinforcing bias, where biased outputs feed back into clinical practice, perpetuating inaccuracies in diagnosis and treatment [83]. Addressing this issue requires a deeper investigation into discrepancies between real-world data and the populations typically studied in research [84]. Emerging opportunities lie in multimodal data integration, which combines diverse inputs such as clinical records, genomics, and neuroimaging. This approach has enhanced diagnostic precision and enabled personalized treatment strategies. For example, imaging genomics has revealed complex interactions among genetic, environmental, and developmental factors that influence psychiatric outcomes, benefiting from large-scale datasets that improved validity and generalizability [85]. Nevertheless, the lack of transparency and interpretability continues to undermine clinician trust. Explainable AI is essential for allowing clinicians to scrutinize, refine, and complement machine-generated decisions, ensuring that human judgment remains central to care. Prioritizing explainability not only fosters accountability but also strengthens healthcare outcomes [86].

In LMICs, AI offers significant potential to reduce care gaps by improving diagnostic accuracy and treatment delivery. Mental health professionals in these settings often rely on subjective assessments, but AI could support more standardized and evidence-based approaches. However, most ML models depend on large, continuously updated datasets—resources that remain limited in many LMICs, thereby constraining their scalability and implementation [87, 88]. Taken together, these challenges highlight the need for future research to focus on bias mitigation, data diversity, explainability, regulatory alignment, and equitable deployment. Only by addressing these barriers can AI fulfill its promise of advancing psychiatric care in both high-resource and resource-constrained settings.

AI holds transformative potential in psychiatry, enabling advances in diagnosis, predictive analytics, personalized therapy, and scalable interventions. Through tools such as multimodal data integration, digital phenotyping, and NLP, AI can uncover complex biological and behavioral patterns while enhancing treatment precision. However, challenges persist, including algorithmic bias, limited interpretability, and ethical concerns related to privacy, consent, and equity. Therefore, the future of psychiatry depends on a collaborative human-AI model in which algorithms provide analytical precision and decision support, while clinicians contribute empathy, contextual understanding, and ethical judgment. Crucially, AI should serve as an assistive partner and not a substitute for professional expertise. Psychiatric evaluation must never rely on self-diagnosis through automated or online platforms, as mental disorders involve intricate biological, psychological, and social factors that require qualified clinical assessment and therapeutic oversight. Professional oversight of AI applications is vital to safeguarding patient welfare and upholding the fundamental human dimension of psychiatric care.

AI: artificial intelligence

ASD: autism spectrum disorder

CBT: cognitive-behavioral therapy

DL: deep learning

EEG: electroencephalogram

EHRs: electronic health records

FHIR: Fast Healthcare Interoperability Resources

GBD: global burden of disease

LMICs: low- and middle-income countries

ML: machine learning

NLP: natural language processing

PTSD: post-traumatic stress disorder

RL: reinforcement learning

WHO: World Health Organization

AI-Assisted Work Statement: During the preparation of this work, the authors used Paperpal (https://paperpal.com/) for language editing and academic paraphrasing to enhance clarity and readability. Figures were created by the authors using Microsoft PowerPoint based on published data cited in the figure legends. The authors take full responsibility for the intellectual content, scientific interpretations, data analysis, and conclusions presented in this publication.

OJO: Conceptualization, Methodology, Writing—review & editing. UOA: Conceptualization, Methodology, Writing—review & editing, Supervision. IN: Investigation, Data curation, Writing—original draft. AOA: Investigation, Data curation, Writing—original draft. FAA: Investigation, Data curation, Writing—original draft. SBA: Investigation, Data curation, Writing—original draft. NOO: Writing—original draft. TAO: Writing—original draft. DELP-III: Validation, Writing—review & editing, Supervision. All authors read and approved the submitted version.

The authors declare that they have no conflicts of interest.

Not applicable.

Not applicable.

Not applicable.

Not applicable, because no new data or databases were used in the preparation of this work.

This research received no external funding.

© The Author(s) 2025.

Open Exploration maintains a neutral stance on jurisdictional claims in published institutional affiliations and maps. All opinions expressed in this article are the personal views of the author(s) and do not represent the stance of the editorial team or the publisher.

Copyright: © The Author(s) 2025. This is an Open Access article licensed under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, sharing, adaptation, distribution and reproduction in any medium or format, for any purpose, even commercially, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.
