Affiliation:

¹Department of General Surgery, Hospital Universitari Sant Joan de Reus, 43204 Reus, Spain

Email: anna.trinidad11@gmail.com

ORCID: https://orcid.org/0000-0001-9970-7599

Affiliation:

²Department of Rheumatology, Hospital Universitari de Bellvitge, 08907 Barcelona, Spain

ORCID: https://orcid.org/0000-0001-9119-5330

Explor Digit Health Technol. 2025;3:101162 DOI: https://doi.org/10.37349/edht.2025.101162

Received: June 17, 2025 Accepted: August 13, 2025 Published: September 18, 2025

Academic Editors: James M. Flanagan, Imperial College London, UK; Atanas G. Atanasov, University of Vienna, Austria

Medicine is undergoing a deep technological transformation, and surgery is at the forefront of this change as technologies such as artificial intelligence (AI), augmented reality (AR), real-time imaging, and robotics converge in operative care. These innovations are progressively being integrated into practice, bringing precision surgery closer to reality. We aimed to assess how the convergence of AI, AR, real-time imaging, and robotics is advancing precision surgery and to outline the next wave of operative care. To do so, we conducted a narrative review of publications addressing AI-driven decision-making, AR-guided navigation, semi-autonomous robotics, and real-time imaging in surgical contexts. We observed that AI algorithms are expanding the potential of medicine by analyzing diverse data sets to optimize treatment strategies. AR-based navigation systems overlay digital anatomical information onto the surgical field, improving the surgeon’s spatial awareness and accuracy. Concurrently, AI-powered robots are beginning to perform some surgical tasks semi-autonomously, potentially shortening procedure times and improving patient outcomes. Over time, the synergy between these disciplines may yield a new era of surgery: One in which patient stratification guides operative decisions and surgeons rely on data-driven systems for intraoperative feedback. This approach reinforces the principles of precision medicine and points toward a future in which data-driven surgery and personalized therapeutics evolve hand in hand, with operative decisions dynamically tailored to the individual patient to improve clinical outcomes and patient safety.

Medicine is undergoing a significant transformation, driven by the exponential acceleration of technology. Much like the digital revolution of the late 20th century, when room-sized computers evolved into the powerful devices we now carry in our pockets, healthcare is entering a new era in which innovation is reshaping every aspect of clinical practice, from diagnostic precision and therapeutic drug development to surgical intervention [1]. Technologies that once seemed confined to science fiction, such as clinical artificial intelligence (AI) and robotic-assisted surgery, are now emerging realities that are redefining medical procedures, pharmacological strategies, clinical decision-making, and the overall patient experience. These advances are not merely enhancing existing practices; they are reconfiguring the physician’s role, the structure of care delivery, and the dynamics of medical practice. Among all medical specialties, surgery is poised to be one of the most affected by technological innovation in the coming years [2].

In the face of such rapid and far-reaching change, anticipating the trajectory of surgical innovation is essential. What will the operating room of the future look like? How will surgeons adapt to systems increasingly driven by data, algorithms, and automation? This article offers a forward-looking perspective on how the ongoing technological revolution in medicine is expected to shape surgery.

This narrative perspective draws on publications indexed in MEDLINE between January 2015 and May 2025 that examine AI-driven decision-making, AR-guided navigation, semi-autonomous robotics, and real-time imaging in surgical settings. All search results were exported, de-duplicated, and curated in Mendeley Desktop 1.19.8, which served as the reference-management tool throughout the review process.

Precision medicine marks a fundamental shift in healthcare. Traditional medicine relies on standardized protocols applied to broad groups of patients with the same diagnosis, assumes an average response to treatment, and overlooks individual biological variability. Precision medicine instead aligns medical decisions, treatments, and interventions with the unique characteristics of each patient. Rather than relying on a one-size-fits-all strategy, this paradigm integrates individual genetic, biomolecular, environmental, and lifestyle data to optimize therapeutic outcomes [3]. Extending these principles into the operating room, precision surgery is emerging as a transformative model that seeks to deliver highly targeted, patient-specific interventions that minimize tissue trauma, preserve physiological function, and accelerate recovery.

At the core of precision surgery is the integration of advanced technologies such as augmented reality (AR), real-time imaging, AI, and robotics (Table 1). These tools are enabling a new era of surgical care, one that is not only safer and more effective, but also deeply aligned with the unique anatomical, physiological, and even molecular profiles of individual patients (Figure 1).

Table 1. Transformative technologies in surgery.

| Technology | Definition | Key characteristics | Current surgical benefits | Future integration |
|---|---|---|---|---|
| Augmented reality | Real-time overlay of digital information (3D models, annotations, or navigation cues) onto the surgeon’s field of view. | Holographic or projection systems. | Enhanced anatomical orientation, safer dissection planes, reduction in iatrogenic injury, improved training via shared visual cues. | Fusion with AI-driven segmentation; cloud-synchronized AR dashboards inside “smart” operating suites. |
| Real-time imaging | Intraoperative acquisition of dynamic visual data (ICG fluorescence, intra-op MRI, etc.), updated continuously, second by second. | High temporal resolution; integration with navigation platforms; quantitative perfusion and tissue-characterization metrics. | Immediate margin assessment, perfusion checks, adaptive resection strategies. | Feedback to robotic actuators and digital twins, automatically triggering alerts or micro-adjustments during critical steps. |
| AI-assisted surgery | Machine-learning algorithms that analyze images and records to support or automate surgical decision-making. | Deep-learning vision, predictive analytics, natural-language interfaces; self-improving models via continual learning. | Higher lesion-detection rates, automated safety alerts, personalized complication prediction, objective performance feedback. | Integration across all surgical phases to support adaptive, patient-specific workflows under algorithmic oversight. |
| Robotic automation | Computer-controlled electromechanical systems that manipulate instruments inside the patient’s body. | Motion scaling, tremor filtration; evolving from surgeon-controlled operation to supervised autonomy. | Greater dexterity, reproducible precision, remote intervention capability, shorter learning curves for complex tasks. | Transition to context-aware, semi-autonomous task execution linked to AI vision and real-time imaging, with collaborative human-robot shared control. |
| Digital twins | High-fidelity computational replicas of an individual patient. | Multi-scale physics models blended with AI; continuously updated by video, force, and bio-signals; support real-time simulation. | Virtual rehearsal, “what-if” risk scoring, personalized implant sizing or ablation zones, objective documentation of intraoperative events. | Acts as the cognitive hub of the OR, enabling predictive, patient-specific control of surgical procedures. |

AR: augmented reality; ICG: indocyanine green; MRI: magnetic resonance imaging; AI: artificial intelligence; OR: operating room; intra-op: intraoperative.

The principles of precision surgery can be applied across the different categories of surgery. Open surgery will remain fundamentally manual, but its precision is enhanced by intraoperative fluorescence, laser-range scanning, and AI-driven optical tissue characterization that may delineate tumor margins in real time [4]. Laparoscopic surgery may gain an extra layer of precision through high-definition vision systems and computer-vision algorithms that provide context-aware safety alarms [5]. Robotic-assisted surgery adds motion scaling, allowing greater accuracy in confined spaces [6]. Computer-assisted robotic surgery introduces supervised autonomy for repetitive or high-dexterity tasks, guided by AI segmentation of live video feeds. Building on this, image-assisted robotic surgery fully integrates real-time imaging with robotic actuation and digital-twin modeling, enabling closed-loop, data-driven adaptation of the operative plan from moment to moment.

AR is revolutionizing surgical practice by enhancing visualization, spatial orientation, and intraoperative decision-making [7]. By overlaying digital information, such as 3D anatomical models, surgical trajectories, and real-time imaging, directly onto the surgeon’s field of view, AR provides immersive, real-time guidance during complex procedures, potentially including the precise administration of localized therapeutic agents. AR-based navigation systems, for example, have demonstrated sub-millimeter accuracy in projecting critical structures onto the surgical field, improving spatial orientation and reducing the risk of iatrogenic injury [7]. Moreover, AR has been shown to enhance surgeon confidence and precision during delicate operations by providing virtual navigation aids aligned with the patient’s anatomy [8]. AR platforms may also allow surgeons to visualize and interact with holographic representations of patient-specific anatomy, facilitating the planning and execution of surgical interventions; future applications may include guiding targeted drug delivery [9].

Real-time imaging technologies provide dynamic, intraoperative visualization of anatomical structures during procedures. Tools such as intraoperative ultrasound, real-time magnetic resonance imaging (MRI), and fluorescence-guided imaging are increasingly used to delineate tumor margins, assess vascular integrity, and adapt surgical strategies in real time [10]. For example, indocyanine green (ICG) fluorescence imaging is widely applied in hepatobiliary and colorectal surgery to assess tissue perfusion and to detect bile leaks or metastatic lymph nodes with high sensitivity [11]. In neurosurgery, intraoperative MRI enables real-time visualization of brain shift during tumor resection, allowing surgeons to update navigation systems and improve resection accuracy [12]. To enable robotic assistance inside the scanner, MRI-compatible robotic platforms are built from non-ferromagnetic composites and rely on pneumatic or piezoelectric actuation with fiber-optic feedback, preserving sub-millimetric precision without distorting the magnetic field [13]. Advanced imaging platforms integrated with AI algorithms can highlight pathological tissue in real time based on texture, color, or fluorescence signals, supporting more precise excision and reducing operative time [14].

AI has become an essential element in the evolution of precision surgery, impacting every stage of the surgical process, from preoperative planning to intraoperative execution and postoperative management. By processing large volumes of complex data, AI supports a more integrated and personalized approach to surgical care, in line with the core goals of precision medicine.

Clinical applications of AI are rapidly expanding across the surgical pathway (Figure 2). In the diagnostic phase, AI-assisted colonoscopy systems have significantly improved the detection of adenomas and polyps in colorectal cancer screening, surpassing conventional methods and enabling earlier therapeutic intervention [15]. AI further enhances diagnostic accuracy by combining radiological and histopathological data to evaluate tumor aggressiveness. In preoperative planning, AI tools used in orthopedic surgery improve implant positioning in hip and knee arthroplasty [16], while in hepatic and neurosurgical procedures, AI-driven 3D segmentation allows precise identification of anatomical landmarks and surgical margins. Intraoperatively, AI systems are integrated with robotic platforms and AR interfaces to support real-time visualization of subsurface anatomy and to guide precise surgical actions [17]. Additionally, “surgical black box” platforms use AI to analyze intraoperative video, audio, and device data, identifying performance deviations and improving surgical safety [18]. AI-driven image analysis also helps distinguish healthy from pathological tissue during resection, and in endoscopy it significantly increases polyp detection through automated visual alerts [15, 18]. Postoperatively, machine learning models that incorporate clinical, demographic, pharmacogenomic, and laboratory data have demonstrated superior performance in predicting complications such as infection, hemorrhage, and readmission. Furthermore, these models can help optimize personalized drug regimens, predict adverse drug reactions, and tailor analgesic strategies, enabling more responsive and personalized perioperative care [19].
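To make the postoperative risk-prediction idea concrete, the sketch below shows how a logistic model, one of the simplest machine-learning approaches used for complication prediction, turns patient features into a probability. It is a minimal illustration that assumes a model has already been trained: the feature names, coefficients, and intercept are hypothetical placeholders invented for this example, not values from the cited studies, and the output must not be used clinically.

```python
import math

# HYPOTHETICAL coefficients, invented for illustration only.
# They are not derived from any clinical dataset or from the cited studies.
HYPOTHETICAL_WEIGHTS = {
    "age_decades": 0.30,           # patient age divided by 10
    "bmi_over_30": 0.45,           # 1 if body-mass index > 30, else 0
    "diabetes": 0.60,              # 1 if diabetic, else 0
    "operative_time_hours": 0.25,  # planned operative duration in hours
}
HYPOTHETICAL_INTERCEPT = -4.0


def predicted_risk(features: dict) -> float:
    """Return a complication probability in (0, 1) from a logistic model.

    Features that are not supplied contribute 0; unknown names raise KeyError.
    """
    linear = HYPOTHETICAL_INTERCEPT + sum(
        HYPOTHETICAL_WEIGHTS[name] * value for name, value in features.items()
    )
    # The logistic (sigmoid) function maps any real number into (0, 1).
    return 1.0 / (1.0 + math.exp(-linear))


if __name__ == "__main__":
    patient = {"age_decades": 7, "bmi_over_30": 1, "diabetes": 1,
               "operative_time_hours": 3}
    print(round(predicted_risk(patient), 3))  # 0.475
```

In practice, such a model would be fitted, validated, and calibrated on institutional data before any deployment; the sketch only shows the shape of the computation that turns a feature vector into a risk estimate.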

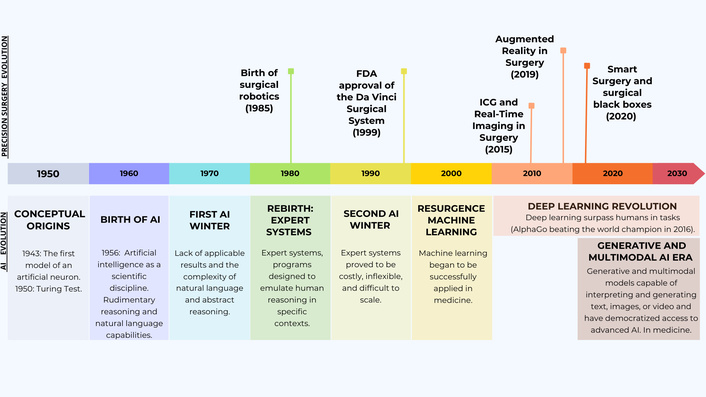

The historical timeline of augmented reality, real-time imaging, artificial intelligence (AI), and robotics.

Over the past decade, minimally invasive surgery has undergone a rapid evolution with the advent of robotic-assisted techniques (Figure 2). The da Vinci platform emerged as a pioneering system and, since its approval by the Food and Drug Administration (FDA), has been widely adopted across multiple surgical specialties [20]. Robotic surgery has been associated with improved clinical outcomes, including reduced intraoperative blood loss and transfusion requirements, shorter hospital stays, and a lower rate of complications compared to traditional open or laparoscopic approaches [20]. Subsequently, other multiport robotic surgical systems have been developed, highlighting the ongoing expansion and innovation in this field [21].

Very recently, robotic surgery reached a new milestone when a thoracic surgeon in China performed a lung cancer operation from a robotic console while the patient was located more than 8,000 kilometers away in Romania [22]. The procedure used a 5G connection that enabled near real-time synchronization between the surgeon’s commands and the movements of a uniportal robotic arm. Through a single incision, the right upper lobe of the lung was successfully resected. Remarkably, the latency across this intercontinental connection was only 0.125 seconds (125 ms), which is almost imperceptible [22].
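A rough lower bound helps put the reported 0.125 s in context. Assuming, as an approximation, that signals travel through optical fiber at about two-thirds of the speed of light (roughly 2 × 10^5 km/s) along a straight 8,000 km path, propagation alone takes:

$$
t_{\text{prop}} \approx \frac{d}{v} = \frac{8000\ \text{km}}{2 \times 10^{5}\ \text{km/s}} = 0.04\ \text{s} = 40\ \text{ms}
$$

Real cable routes are longer than the straight-line distance, so the true one-way propagation delay is higher, and a round trip at least doubles it to about 80 ms. Whether the 0.125 s figure is one-way or round-trip is not stated; in either case, physical distance accounts for a substantial share of it, leaving a limited margin for network switching, video encoding, and robot control.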

Clinicians and surgeons frequently lag behind the latest technological developments, including robotics, because any innovation must first undergo extensive medical, ethical, and legal evaluation before it can be safely implemented in clinical practice. To understand where robotic surgery is heading, it is therefore essential to look beyond the operating room and examine the broader landscape of robotics, where emerging trends often signal the future direction of surgical innovation.

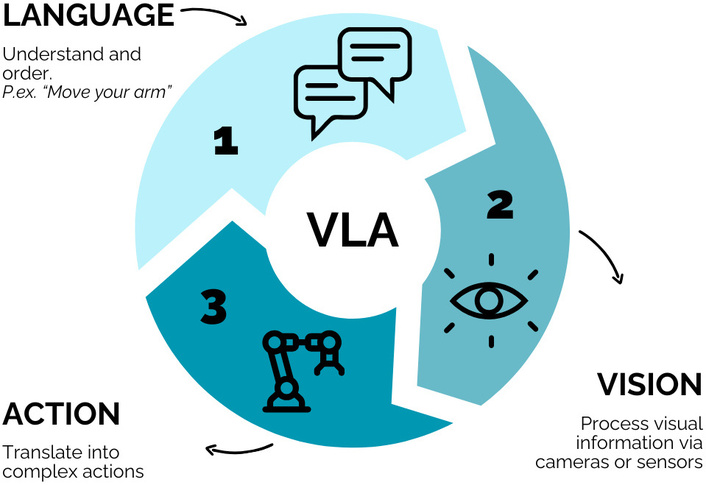

One of the most recent breakthroughs is the integration of AI into robotics. Traditionally, robots have been trained to perform specific tasks through direct human instruction. For instance, a robot could be programmed to transfer an object from one hand to another, but it would not be able to respond to a greeting unless explicitly coded to do so. AI systems have changed this paradigm by enabling robots to adapt autonomously to new situations, objects, and environments without prior task-specific training. This is made possible by integrating natural language understanding, visual perception, and the execution of precise physical actions [23]. The concept has been further advanced by the introduction of the visuo-linguistic actuator (VLA), more commonly termed a vision-language-action model, as a core system component [24]. A VLA is an architecture that combines three key capabilities: vision (visuo), language (linguistic), and physical action (actuator). The goal is to create a unified AI model that enables robots to interact with the world in a more natural, generalist, and context-aware manner (Figure 3). The most recent model reported in [24] has been trained to understand and follow natural language instructions (e.g., “move the light”), process visual information from the environment (via cameras or sensors), and translate these multimodal inputs into complex physical actions (such as moving a robotic arm or grasping objects), hinting at future capabilities for precise, AI-guided surgery, including the intraoperative administration of drugs [24].

How robots interact with the world using the visuo-linguistic actuator (VLA) approach.

In essence, these systems go beyond executing pre-programmed commands: They can reason about what they see and hear and then decide how to act. This represents a significant step toward more autonomous and intelligent robotic systems that could reshape how surgical robotics is practiced. AI is thus rapidly becoming an integral component of robotic surgery, enhancing the surgeon’s capabilities through data-driven insights and emerging autonomous functionalities. One of the most promising developments is the incorporation of computer vision and AI algorithms to provide real-time intraoperative guidance [5], for example by displaying suggestions, alerts, or the outlines of anatomical structures identified automatically by AI as the surgeon operates. These recommendations are expected to become significantly more accurate and context-aware in the coming years. This digital “second look” can highlight potential risk zones or guide dissection planes, acting as a silent safety assistant throughout the procedure [25].

Regarding the level of automation, although the surgeon retains primary control, various functions are being developed to delegate repetitive or high-precision tasks to the robotic system. AI may soon enable robotic platforms to perform simple surgical actions, such as incision closure or knot tying, semi-autonomously [6]. Prototype systems already exist in which the surgeon positions the instruments and, with a single command, the robot completes the suture autonomously. Notably, in 2022, researchers at Johns Hopkins University reached a significant milestone with the first fully automated laparoscopic surgery, in which an autonomous robotic system independently performed a bowel anastomosis in an animal model without human intervention [26]. AI also has innovative applications in performance analysis and surgical training. Newer platforms incorporate real-time analytics and collaborative tools that collect objective data from each procedure, including instrument movement, applied forces, and task efficiency, and provide personalized feedback to the surgeon, promoting continuous technical improvement [27].
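The kind of objective data described above can be illustrated with two widely used kinematic metrics, instrument path length and task duration, plus a simple force summary. The sketch below assumes a hypothetical log format (timestamped tool-tip positions in millimeters and force samples in newtons); the function names and data layout are illustrative only and do not correspond to any commercial platform’s API.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical log format: (time_s, x_mm, y_mm, z_mm) for the instrument tip.
Sample = Tuple[float, float, float, float]


def path_length_mm(samples: Sequence[Sample]) -> float:
    """Total distance travelled by the tip: sum of straight segments between samples."""
    return sum(math.dist(a[1:], b[1:]) for a, b in zip(samples, samples[1:]))


def duration_s(samples: Sequence[Sample]) -> float:
    """Elapsed time between the first and the last sample."""
    return samples[-1][0] - samples[0][0] if len(samples) > 1 else 0.0


def summarize(samples: Sequence[Sample], forces_n: List[float]) -> Dict[str, float]:
    """Compute a few simple, illustrative performance metrics for one task."""
    length = path_length_mm(samples)
    elapsed = duration_s(samples)
    return {
        "path_length_mm": length,
        "duration_s": elapsed,
        "mean_speed_mm_per_s": length / elapsed if elapsed > 0 else 0.0,
        "peak_force_n": max(forces_n, default=0.0),
    }


if __name__ == "__main__":
    log = [(0.0, 0.0, 0.0, 0.0), (1.0, 3.0, 4.0, 0.0), (2.0, 3.0, 4.0, 12.0)]
    print(summarize(log, [1.0, 2.5, 0.5]))
    # {'path_length_mm': 17.0, 'duration_s': 2.0, 'mean_speed_mm_per_s': 8.5, 'peak_force_n': 2.5}
```

For the same task, a shorter path and a shorter duration are generally read as greater economy of motion, but any such metric would need validation against expert assessment before being used for feedback or credentialing.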

Several major advances are expected to shape the future of robotic surgery. The fifth-generation da Vinci system is designed to incorporate a computing system significantly more powerful than its predecessors, offering up to 10,000 times the processing capacity of the previous model [27]. This leap in performance is expected to enable future upgrades that may be retrofitted into existing da Vinci platforms, further extending their capabilities [27]. Although still in its early stages, this convergence of AI and robotic systems points toward a future in which automation and remote surgery could significantly expand access to high-quality surgical care while maintaining rigorous standards of precision and patient safety.

Medicine is entering a new era defined by autonomy, personalization, and the integration of intelligent systems. Operating rooms are expected to evolve into so-called Cognitive Operating Ecosystems (COEs), intelligent environments that integrate an AI inference engine, collaborative robotics, and immersive mixed-reality workspaces [2, 28]. These ecosystems will continuously stream multimodal sensor data, including high-resolution video, robotic inputs, environmental parameters, and physiological signals, into a patient-specific digital twin [29]: A high-fidelity computational replica of the patient that enables virtual procedure planning, rehearsal, and real-time prediction of complications. This will allow real-time “what-if” simulations and predictive risk scoring throughout the procedure [29]. Surgeons will interact with the digital twin through holographic dashboards that render probabilistic heat maps of critical anatomy and AI-generated decision cues [30].

However, as connectivity expands, so do the risks related to cybersecurity and operational governance. Because next-generation operating rooms will link many devices, each connection becomes a potential entry point for attackers. To keep the system safe, a “zero trust” approach is expected to be adopted: No user or device is trusted by default, identity is verified at every access request, and the network is segmented into small zones to prevent intruders from moving laterally [31]. Encrypted communication protocols and robust governance frameworks will be essential to monitor system performance, define safety metrics, and clarify responsibilities for semi-autonomous actions [32]. An independent ethics committee will play a critical role in ensuring that patient consent, data provenance, and transparency requirements are met.
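The three zero-trust principles named above (no default trust, verification on every request, and network segmentation) can be sketched in a few lines. The example below is a minimal illustration that assumes hypothetical device segments and a shared HMAC key; real deployments would rely on managed certificates, mutual TLS, and dedicated policy engines, none of which this sketch implements.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical micro-segmentation policy: only these (source, destination)
# segment pairs may communicate. Anything not listed is denied by default.
ALLOWED_FLOWS = {
    ("imaging", "navigation"),
    ("console", "robot"),
}

# Placeholder key for illustration only; real systems use managed key storage.
DEMO_KEY = b"demo-key-not-for-production"


def issue_credential(device_id: str, segment: str, expires_at: int,
                     key: bytes = DEMO_KEY) -> str:
    """Sign a short-lived credential binding a device to its network segment."""
    message = f"{device_id}|{segment}|{expires_at}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def authorize(device_id: str, segment: str, expires_at: int, signature: str,
              target_segment: str, now: Optional[float] = None,
              key: bytes = DEMO_KEY) -> bool:
    """Verify one request from scratch; nothing from earlier requests is trusted."""
    now = time.time() if now is None else now
    expected = issue_credential(device_id, segment, expires_at, key)
    if not hmac.compare_digest(expected, signature):
        return False  # identity or segment claim could not be verified
    if now >= expires_at:
        return False  # credential expired: re-authentication required
    return (segment, target_segment) in ALLOWED_FLOWS  # deny by default


if __name__ == "__main__":
    cred = issue_credential("us-probe-01", "imaging", expires_at=2_000_000_000)
    # Imaging device talking to navigation: allowed by the policy.
    print(authorize("us-probe-01", "imaging", 2_000_000_000, cred, "navigation",
                    now=1_900_000_000))  # True
    # Same valid credential, but the imaging segment may not command the robot.
    print(authorize("us-probe-01", "imaging", 2_000_000_000, cred, "robot",
                    now=1_900_000_000))  # False
```

The design choice worth noting is that a valid identity is necessary but not sufficient: even an authenticated device is confined to the flows its segment is explicitly allowed, which is what limits lateral movement if one device is compromised.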

These changes call for a redefinition of surgical training and accreditation. Future surgeons will need to develop competencies in data science as well as in human-machine interaction, as they transition from instrument controllers to cognitive-procedural strategists. Training will incorporate mixed-reality rehearsal environments, and trust-calibration protocols analogous to aviation crew resource management will be introduced to optimize human-AI teaming. Credentialing will shift from volume-based metrics to performance-based badges linked to validated key performance indicators generated by the COE.

Future surgical innovation must also incorporate environmental considerations and promote more sustainable alternatives. This includes reducing the carbon footprint of operating rooms by optimizing energy usage, minimizing single-use plastics, and adopting greener sterilization methods. Additionally, manufacturers and healthcare systems should prioritize the development and procurement of surgical instruments and technologies that are designed for reusability, recycling, or lower environmental impact throughout their lifecycle. Integrating sustainability into surgical training and institutional policies will be essential to drive long-term change.

At the same time, telepresence and remote surgical mentoring may help bridge global disparities in access to specialized expertise. Advanced telecommunication technologies, including real-time video streaming, AR, and robotic-assisted platforms, enable experienced surgeons to guide or even perform procedures in geographically distant or underserved regions. This can enhance the quality of care in low-resource settings, facilitate skill transfer, and reduce the need for patient or provider travel, further contributing to environmental goals. Moreover, as global collaboration in surgery expands, virtual networks can foster ongoing education, mentorship, and cross-border research, ultimately improving surgical outcomes and equity worldwide.

Collectively, these developments outline a path towards an operating room ecosystem that is not merely more precise or personalized but fundamentally re-architected around data-centric autonomy, secure connectivity, and human-machine symbiosis.

While the exact trajectory of the latest developments in surgery remains uncertain, it seems clear that robotics and AI are here to stay. The operating theatre itself is evolving into an ecosystem in which AI engines, collaborative robotics, and mixed-reality workstations will become the foundational pillars of future surgical procedures. These technologies are not intended to replace physicians but rather to augment our capabilities and unlock new dimensions of clinical and creative potential. The challenge and the opportunity lie in integrating these advances through an ethical, collaborative, and patient-centered lens.

AI: artificial intelligence

AR: augmented reality

COE: Cognitive Operating Ecosystem

ICG: indocyanine green

MRI: magnetic resonance imaging

VLA: visuo-linguistic actuator

During the preparation of this work, the authors used ChatGPT primarily to improve the clarity and readability of the text, and the DeepResearch tool to review literature. After using the tools, the authors carefully reviewed, edited, and verified the content, and took full responsibility for the final version of the manuscript.

ATB: Conceptualization, Investigation, Writing—original draft, Writing—review & editing. DB: Conceptualization, Investigation, Writing—review & editing. Both authors read and approved the submitted version.

The authors declare that they have no conflicts of interest for this manuscript.

Not applicable.


© The Author(s) 2025.

Open Exploration maintains a neutral stance on jurisdictional claims in published institutional affiliations and maps. All opinions expressed in this article are the personal views of the author(s) and do not represent the stance of the editorial team or the publisher.

Copyright: © The Author(s) 2025. This is an Open Access article licensed under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, sharing, adaptation, distribution and reproduction in any medium or format, for any purpose, even commercially, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.
